| Name | |

|---|---|

| Ayush Patel | patel.ayushj@northeastern.edu |

| Bharath Rajupalla | palla.b@northeastern.edu |

| Harsha Yarramsetty | yarramsetty.h@northeastern.edu |

| Luv Verma | verma.lu@northeastern.edu |

| Shivali Katharia | katharia.s@northeastern.edu |

| Spandan Maaheshwari | maaheshwari.s@northeastern.edu |

Outline

- Introduction

- Scoping

- Setup on Azure & Databricks(Optional)

- Data

- Modeling

- Deployment

- Monitoring

- Cost Analysis

1. Introduction

1.1. Problem Statement

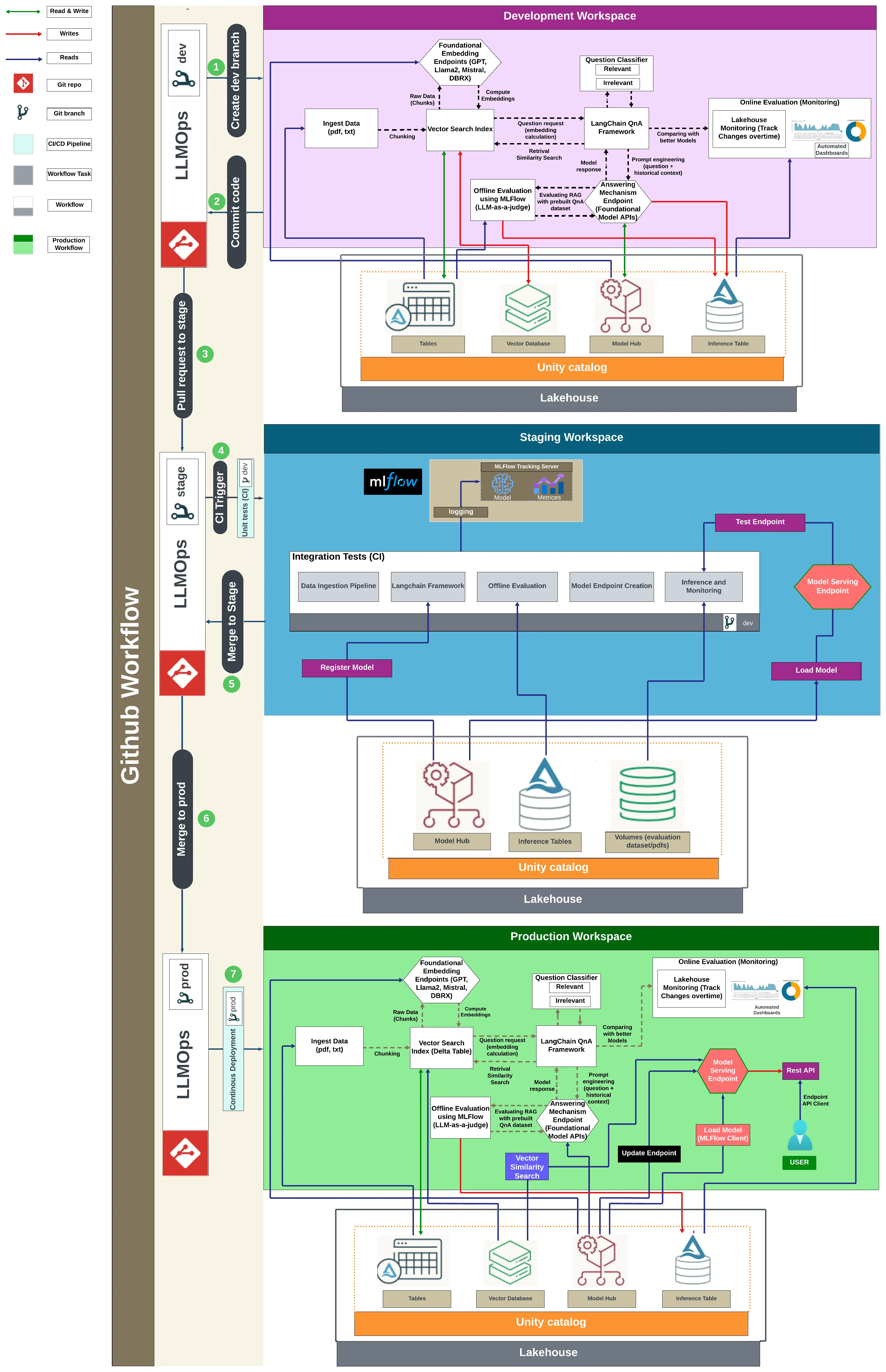

The project aims to tackle the challenge of enhancing the relevance and contextual precision of AI-powered chatbots by integrating the Retrieval-Augmented Generation (RAG) framework on Azure Databricks. Traditional chatbots often face difficulties delivering accurate responses due to hallucination issues, limitations in accessing relevant external information and dynamically updating content. To address this, our solution incorporates continuous integration and deployment (CI/CD) processes for rapid updates, utilizes Delta Tables for secure data storage, and leverages serverless architecture for scalability.

1.2. Methodology

1. Framework Integration and Setup:

- RAG Implementation: Integrate Retrieval-Augmented Generation (RAG) with a Delta Table-stored knowledge base on Azure Databricks, enhancing chatbot responses with real-time information retrieval.

- Azure Databricks Configuration: Implement advanced vector search techniques for faster, more accurate document retrieval. Utilize Unity Catalog on Azure Databricks to deploy the chatbot and manage data storage (ADLS Gen 2) and preprocessing.

2. Data:

- Delta Tables: Employ Delta Tables for ACID transactions and optimized metadata handling, ensuring secure and scalable data management.

- Data Pre-processing: Transform PDF/text input into vector embeddings using models like Llama-2 or GPT-3.5, and store these in Delta Tables on Azure (ADLS Gen 2) for efficient management and retrieval.

3. Continuous Integration and Deployment (CI/CD):

- Development Pipeline: Establish a continuous integration and deployment pipeline using GitHub Actions on Azure Databricks, automating updates and integrations.

- Automated Testing: Integrate automated unit and integration tests to validate chatbot functionality and performance throughout the deployment pipeline.

4. Lifecycle Management with MLFlow:

- Model Tracking: Utilize MLFlow for tracking experiments, model lifecycle management, and documenting performance with rollback capabilities.

- Model Evaluation: Use MLFlow to systematically evaluate the RAG model’s performance, utilizing custom metrics focused on the chatbot’s response relevance and accuracy.

5. Monitoring and Cost Analysis:

- Performance Monitoring: Set up lakehouse monitoring to assess system performance, tracking metrics such as usage, response times, and accuracy.

- Cost Analysis: In the end, we have provided a thorough cost analysis of the entire project setup.

1.3. Goals

The goals of this project comprised of the following:

- Ensure the robustness and reliability of the chatbot system through TDD practices.

- Use MLflow to manage and automate the data pipeline.

- Implement DVC for versioning the transformed data.

- Utilize MLFlow for versioning the models and tuning hyperparameters to optimize model performance.

- Deploy the model using a scalable and robust serverless architecture.

- Implement a monitoring dashboard to track data and detect concept drifts, ensuring the model remains relevant and accurate over time.

- Use Pytest and GitHub Actions to maintain a seamless CI/CD process, ensuring continuous integration and deployment without disruptions.

The README for our project can be found here: GitHub

1.4. Tools Used for MLOps

- Python >=3.8

- GitHub Actions

- Terraform

- MLflow

- Langchain

- Llama-2, GPT-3.5, GPT-4

- Azure Databricks

- ADLS Gen 2

- Unity Catalog

- Vector Database

- Lakehouse Monitoring (Dashboards)

1.5. Project Architecture